Data alignment in multimodal AI is the process of matching up data from multiple modalities, such as text, images, audio, and video, so that items describing the same thing can be processed together. It is a prerequisite for systems that must understand inputs and generate responses across different types of data.

Multimodal AI systems aim to approximate human-like understanding by processing and interpreting information from diverse sources. To support this, data alignment organizes and synchronizes the various data types so that each item is linked to its counterparts in the other modalities. A well-aligned multimodal dataset lets a model learn the context and relationships between modalities, which generally improves its performance and accuracy.

Consider an example where a multimodal AI is used for a product recommendation system that analyzes user reviews (text), product images, and video demonstrations. For such a system to function optimally, it needs to align these data types effectively. Textual descriptions and user sentiments must be paired with corresponding visual and auditory data, enabling the model to form a comprehensive understanding of the product’s features and user preferences.
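The pairing step in this example can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes every record carries a shared `product_id` key, and the field names (`text`, `path`) and the function name `align_by_product` are hypothetical.

```python
from collections import defaultdict


def align_by_product(reviews, images, videos):
    """Group per-modality records under a shared product_id key.

    Assumption: each record is a dict with a "product_id" field.
    Products missing a modality simply get an empty list for it.
    """
    aligned = defaultdict(lambda: {"reviews": [], "images": [], "videos": []})
    for review in reviews:
        aligned[review["product_id"]]["reviews"].append(review["text"])
    for image in images:
        aligned[image["product_id"]]["images"].append(image["path"])
    for video in videos:
        aligned[video["product_id"]]["videos"].append(video["path"])
    return dict(aligned)


# Hypothetical sample data for illustration only.
reviews = [{"product_id": "p1", "text": "Sturdy and easy to assemble."}]
images = [
    {"product_id": "p1", "path": "p1_front.jpg"},
    {"product_id": "p2", "path": "p2_front.jpg"},
]
videos = [{"product_id": "p1", "path": "p1_demo.mp4"}]

aligned = align_by_product(reviews, images, videos)
```

Keeping an empty list for a missing modality, rather than dropping the product, makes gaps in the dataset visible so they can be handled explicitly before training.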

One of the primary challenges in data alignment is synchronizing the temporal and spatial aspects of different data types. For instance, in a video where a person is speaking, the audio must be aligned with the corresponding visual frames so that each spoken word stays attached to what is on screen at that moment. This alignment is critical in applications like automatic video transcription or real-time language translation.
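As a rough sketch of the temporal case, the snippet below maps timestamped words from a transcript onto video frame indices. It assumes a constant frame rate and that word timings (in seconds) are already available, for example from a speech recognizer; the function names and the sample words are hypothetical.

```python
import math


def frames_for_segment(start_s, end_s, fps):
    """Return (first, last) inclusive frame indices overlapping [start_s, end_s).

    Assumption: frame i covers the interval [i / fps, (i + 1) / fps).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    first = math.floor(start_s * fps)
    # A zero-length segment still maps to the frame it falls in.
    last = max(first, math.ceil(end_s * fps) - 1)
    return first, last


def align_words_to_frames(words, fps):
    """Attach a frame range to each (word, start_s, end_s) tuple."""
    return [
        {"word": word, "frames": frames_for_segment(start, end, fps)}
        for word, start, end in words
    ]


# Hypothetical word timings for a 25 fps clip.
words = [("hello", 1.0, 1.5), ("world", 1.5, 2.0)]
word_frames = align_words_to_frames(words, fps=25)
# "hello" spans frames 25-37 and "world" spans frames 37-49; frame 37 is
# shared because the word boundary at 1.5 s falls in the middle of it.
```

Real footage often has variable frame rates or audio drift, in which case the container's per-frame timestamps would be needed instead of a single `fps` value.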

Data alignment also plays a pivotal role in training multimodal AI models. During the training phase, aligned data helps the model learn how different modalities interact and how to leverage them for improved decision-making. This can lead to more robust models capable of handling complex tasks, such as emotion recognition from facial expressions, speech tone, and textual analysis simultaneously.

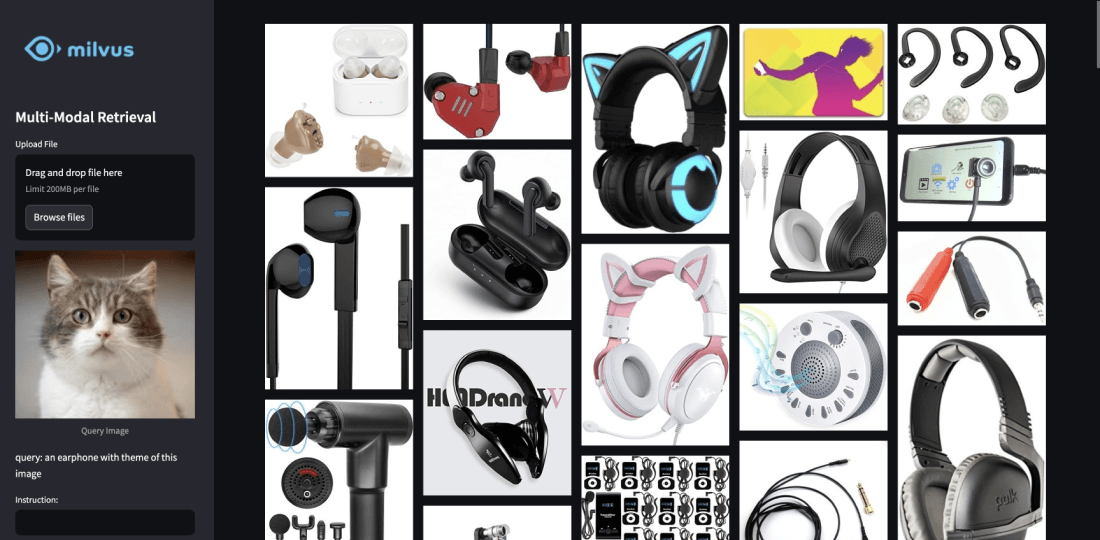

Moreover, data alignment facilitates the development of cross-modal retrieval systems, where a query in one modality (e.g., text) retrieves relevant information in another (e.g., images). This is particularly useful in search engines and digital asset management systems that need to manage and retrieve diverse data types efficiently.
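One common way to implement this kind of retrieval, offered here as an illustration rather than the only approach, is to represent both the query and the candidates as vectors in a shared embedding space and rank candidates by cosine similarity. The sketch below assumes such embeddings already exist; the two-dimensional vectors and file names are made-up toy values, and in practice the vectors would come from a model trained on aligned text-image pairs.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, index, k=1):
    """Return the names of the k indexed items most similar to query_vec."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Toy image embeddings (hypothetical values, not produced by a real model).
image_index = {
    "cat.jpg": [1.0, 0.0],
    "dog.jpg": [0.0, 1.0],
}

# Toy embedding of a text query assumed to lie close to the "cat" direction.
text_query = [0.9, 0.1]
top_match = retrieve(text_query, image_index, k=1)
```

The ranking is only meaningful if the text and image encoders were trained on aligned pairs, which is where data alignment enters: without it, the two sets of vectors do not share a comparable space.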

In conclusion, data alignment is a cornerstone of multimodal AI, enabling the integration and coherent interpretation of multiple data types. By keeping different modalities synchronized and contextually connected, it improves a model's ability to produce accurate, context-aware outputs. This capability is essential for AI applications that need a holistic understanding of complex, multimodal data.