Building image search systems typically involves three core components: feature extraction, indexing/storage, and similarity search. Each step relies on specific tools and frameworks to handle the unique challenges of processing and querying visual data. Below is a breakdown of common tools and their roles in creating such systems.

For feature extraction, deep learning frameworks such as TensorFlow or PyTorch are used to train or fine-tune models that convert images into numerical embeddings (feature vectors). Pre-trained models such as ResNet, EfficientNet, or CLIP can often generate useful embeddings without custom training. For classification networks like ResNet, the embedding is typically taken from the layer just before the final classifier. Libraries like OpenCV handle preprocessing (e.g., resizing and normalization) before images are fed to the model, and inference runtimes such as ONNX Runtime or TensorRT speed up the model in production. For example, a system might use PyTorch to load a CLIP model, preprocess an image, and output a 512-dimensional vector representing its content (512 is the embedding size of, e.g., CLIP ViT-B/32).
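The preprocessing step can be sketched in plain Python. This is a minimal illustration, not how OpenCV or PyTorch implement it: it assumes an image is a nested list of (R, G, B) tuples with values from 0 to 255, and the helper names `resize_nearest` and `to_normalized_chw` are hypothetical. The mean and standard deviation constants are the ImageNet statistics commonly used with torchvision's pre-trained ResNet and EfficientNet weights; CLIP uses its own, slightly different values.

```python
from typing import List, Sequence

# Per-channel mean/std commonly used with ImageNet-pretrained models
# (e.g., torchvision ResNet/EfficientNet weights). CLIP uses different values.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

Pixel = Sequence[int]          # (R, G, B), each 0..255
Image = List[List[Pixel]]      # rows of pixels, shape H x W x 3


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbor resize: a crude, illustrative stand-in for cv2.resize."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def to_normalized_chw(
    img: Image,
    mean: Sequence[float] = IMAGENET_MEAN,
    std: Sequence[float] = IMAGENET_STD,
) -> List[List[List[float]]]:
    """Scale to [0, 1], standardize each channel, and reorder HWC -> CHW,
    the channel-first layout PyTorch vision models expect."""
    h, w = len(img), len(img[0])
    return [
        [[(img[r][c][ch] / 255.0 - mean[ch]) / std[ch] for c in range(w)]
         for r in range(h)]
        for ch in range(3)
    ]
```

In a real pipeline, `cv2.resize` and framework transforms do this work on arrays or tensors. One practical detail worth checking: `cv2.imread` returns pixels in BGR order, so channels usually need reordering to RGB before RGB normalization constants are applied.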

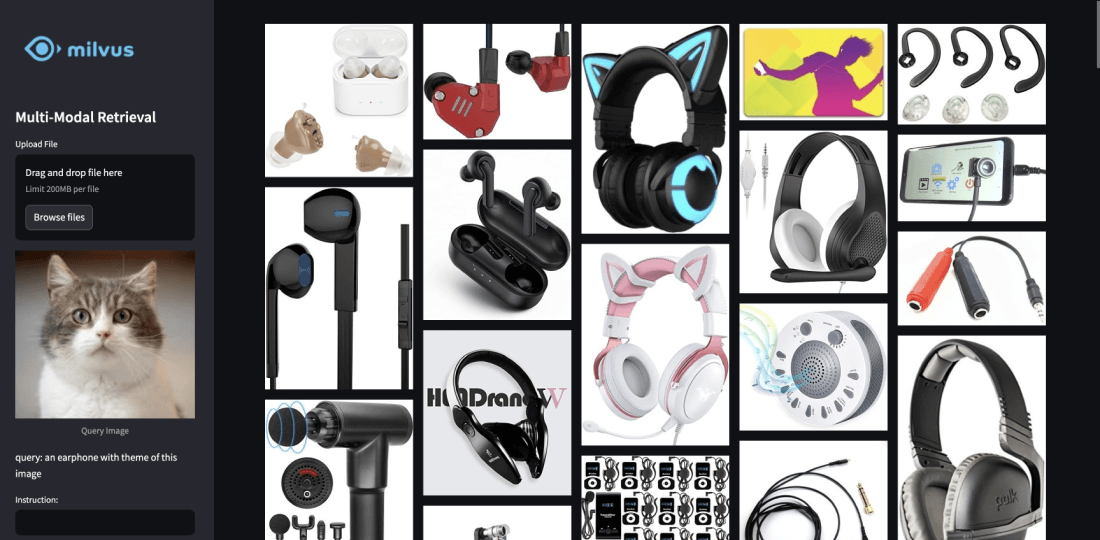

Next, indexing and storage tools manage the embeddings and enable efficient similarity search. FAISS (Facebook AI Similarity Search) is a popular library for indexing high-dimensional vectors and performing fast nearest-neighbor search. For scalable storage, systems such as Elasticsearch (which supports dense vector fields and k-nearest-neighbor queries) or Milvus (a dedicated vector database) handle large datasets. PostgreSQL with the pgvector extension is another option for smaller-scale systems. For instance, FAISS can hold millions of embeddings in memory, and with an approximate index, queries typically return similar images within milliseconds. Milvus adds a distributed architecture and supports multiple index types, such as IVF_FLAT and HNSW, which trade off search speed against accuracy.
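To make the speed/accuracy trade-off concrete, here is a toy inverted-file (IVF) index in plain Python, assuming L2-normalized embeddings compared by inner product (equivalent to cosine similarity for unit vectors). It clusters the vectors with a few rounds of spherical k-means, then scans only the `nprobe` clusters whose centroids are closest to the query. The parameter names `nlist` and `nprobe` mirror those used by FAISS's `IndexIVFFlat` and Milvus's IVF_FLAT, but the class `ToyIVF` and its methods are hypothetical and far slower than either library. `exact_search` is the brute-force baseline that a flat index computes.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def exact_search(db: List[List[float]], q: Vector, k: int) -> List[Tuple[float, int]]:
    """Brute-force inner-product search over every vector (a flat index)."""
    return heapq.nlargest(k, ((dot(v, q), i) for i, v in enumerate(db)))


class ToyIVF:
    """Inverted-file index: cluster vectors, then scan only nprobe clusters."""

    def __init__(self, nlist: int, iters: int = 10):
        self.nlist, self.iters = nlist, iters
        self.centroids: List[List[float]] = []
        self.lists: Dict[int, List[int]] = {}
        self.db: List[List[float]] = []

    def _nearest_centroids(self, v: Vector, n: int) -> List[int]:
        scores = [(dot(c, v), j) for j, c in enumerate(self.centroids)]
        return [j for _, j in heapq.nlargest(n, scores)]

    def train_and_add(self, db: List[List[float]]) -> None:
        self.db = db
        # Deterministic init with the first nlist vectors (real k-means samples).
        self.centroids = [list(v) for v in db[: self.nlist]]
        for _ in range(self.iters):
            assign = [self._nearest_centroids(v, 1)[0] for v in db]
            for j in range(self.nlist):
                members = [db[i] for i, a in enumerate(assign) if a == j]
                if members:  # keep the old centroid if a cluster empties
                    mean = [sum(col) / len(members) for col in zip(*members)]
                    self.centroids[j] = l2_normalize(mean)
        # Build the inverted lists: cluster id -> ids of its member vectors.
        self.lists = {j: [] for j in range(self.nlist)}
        for i, v in enumerate(db):
            self.lists[self._nearest_centroids(v, 1)[0]].append(i)

    def search(self, q: Vector, k: int, nprobe: int = 1) -> List[Tuple[float, int]]:
        # Only vectors in the nprobe closest clusters are scored.
        candidates = (i for j in self._nearest_centroids(q, nprobe) for i in self.lists[j])
        return heapq.nlargest(k, ((dot(self.db[i], q), i) for i in candidates))
```

Raising `nprobe` scans more clusters, which improves recall at the cost of more distance computations; setting `nprobe` equal to `nlist` degenerates to exact search. HNSW takes a different approach (a layered graph of neighbor links) and is not shown here.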

Finally, backend and API layers integrate these components into a usable system. Frameworks like Flask or FastAPI expose REST APIs that accept image queries, run them through the pipeline, and return results. Message brokers like RabbitMQ or Kafka handle asynchronous work such as batch indexing. Docker packages each service into a container, and Kubernetes orchestrates and scales those containers. A typical workflow might involve a FastAPI endpoint receiving an image, extracting its embedding with a PyTorch model, querying FAISS for the IDs of the nearest vectors, and looking up those IDs in a PostgreSQL database to return image metadata such as file paths. Cloud services like AWS SageMaker or Google Vertex AI can also streamline model deployment and scaling.
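That request flow can be sketched end to end with the standard library, under heavy simplifying assumptions: `embed` is a hypothetical stand-in for a PyTorch model (it returns the L2-normalized mean color, not a learned embedding), a Python list plays the role of the FAISS index, and an in-memory `sqlite3` database stands in for PostgreSQL. `SearchService` and its methods are illustrative names, not part of any framework.

```python
import math
import sqlite3
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def embed(pixels: Sequence[Pixel]) -> List[float]:
    """Hypothetical stand-in for a CNN/CLIP encoder: L2-normalized mean RGB.
    Assumes at least one pixel."""
    n = len(pixels)
    mean = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    norm = math.sqrt(sum(x * x for x in mean)) or 1.0
    return [x / norm for x in mean]


class SearchService:
    """Wires feature extraction, a vector index, and a metadata store together."""

    def __init__(self) -> None:
        self.vectors: List[List[float]] = []   # stand-in for a FAISS index
        self.db = sqlite3.connect(":memory:")  # stand-in for PostgreSQL
        self.db.execute("CREATE TABLE images (id INTEGER PRIMARY KEY, path TEXT)")

    def index_image(self, path: str, pixels: Sequence[Pixel]) -> int:
        # The vector's position in the index doubles as the table's primary key.
        image_id = len(self.vectors)
        self.vectors.append(embed(pixels))
        self.db.execute("INSERT INTO images (id, path) VALUES (?, ?)", (image_id, path))
        return image_id

    def search(self, pixels: Sequence[Pixel], k: int = 3) -> List[Tuple[str, float]]:
        q = embed(pixels)
        # Exact inner-product scoring; a real system would query FAISS here.
        scored = sorted(
            ((sum(a * b for a, b in zip(v, q)), i) for i, v in enumerate(self.vectors)),
            reverse=True,
        )[:k]
        results = []
        for score, image_id in scored:
            # Map each matched vector ID back to its metadata row.
            (path,) = self.db.execute(
                "SELECT path FROM images WHERE id = ?", (image_id,)
            ).fetchone()
            results.append((path, score))
        return results
```

A FastAPI deployment would typically wrap `search` in an `@app.post` route that reads an `UploadFile`, decodes it into pixels, and returns the (path, score) pairs as JSON. In production, the metadata lookup would go through a PostgreSQL driver with connection pooling, and batching all matched IDs into a single query avoids one database round trip per result.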